Turnitin and detection of AI-generated text

Many of you already know that Turnitin, the company that provides us with the similarity-checking system known to us by the same name, launched its AI detection tool on 4 April 2023. Some of you have already used it. Many of you have inquired about the detector’s capabilities and asked why it wasn’t working in some cases. I’ll try to address the issues I’ve run into during the last month regarding this tool.

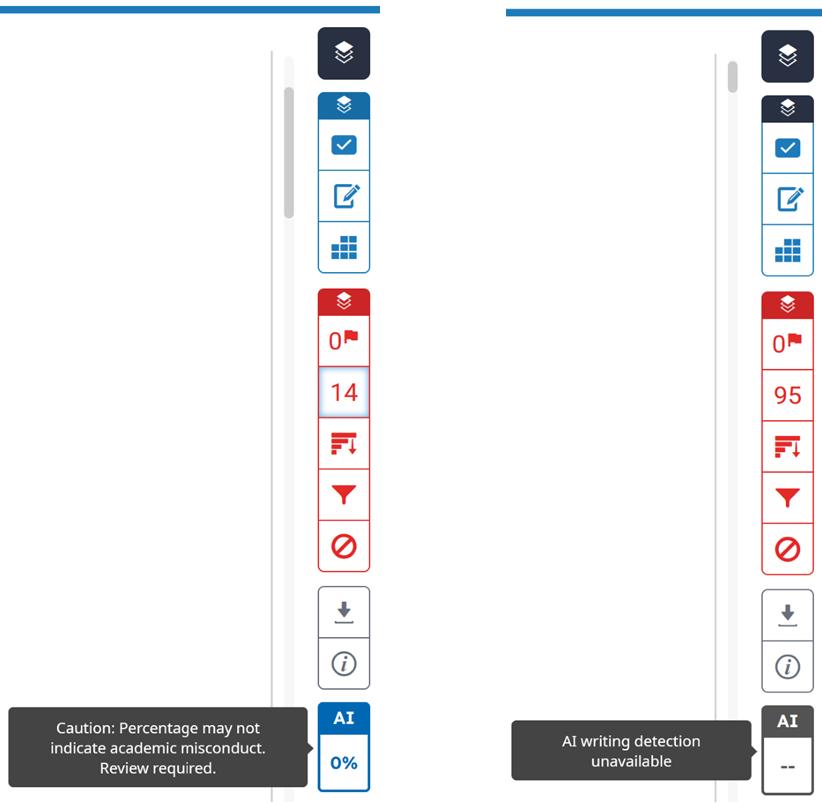

First, does the availability of this tool in Turnitin’s Feedback Studio, the interface for accessing student submissions in Turnitin, require teachers to take time to learn how to use it? No. The tool is available as an additional button alongside the familiar feedback and similarity report buttons (see the figure above). Simply click the AI button to get a report that gives the percentage of text estimated to be AI-generated, with the suspect text highlighted as in the similarity report. The report opens in a new Feedback Studio tab; like the similarity report, it is not directly available in MyCourses, Aalto University’s learning management system. However, teachers and administrative staff will have to prepare themselves to handle the inevitable cases of suspected misuse of AI in writing. Read on to learn what this means.

Next, some relevant information that Turnitin provides on its website. Turnitin states [1], [5] that when building their detector, they chose to “detect the presence of AI writing with 98% confidence and a less than one per cent false-positive rate” in their “controlled lab environment” and that they have been “very careful to adjust detection capabilities to minimize false positives and create a safe environment to evaluate student writing for the presence of AI-generated text”. They are very confident that it is accurate: “If we say there’s AI writing, we’re very sure there is. Our efforts have primarily been on ensuring a high accuracy rate accompanied by a less than 1% false positive rate, to ensure that students are not falsely accused of any misconduct”. They do acknowledge that the detector may be wrong, and they give tips on how to address situations of false positives [2], which I repeat here concisely for convenience.

- Be prepared for the eventuality of having to face the case of a student being wrongly flagged for having submitted AI-generated text. Plan the conversation you will have with the student.

- Give the student the benefit of the doubt.

- Have an honest and open conversation with the student, acknowledging the possibility of a false positive.
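To see why it is worth planning for false positives even when the rate is low, here is a rough back-of-the-envelope sketch in Python. It assumes, purely for illustration, a false-positive rate of exactly 1% per document (Turnitin states less than 1%), a hypothetical batch of 300 submissions that contain no AI-generated text, and independence between submissions. None of these figures come from Turnitin or Aalto.

```python
# Illustrative assumptions only, not Turnitin or Aalto figures.
FALSE_POSITIVE_RATE = 0.01        # Turnitin's stated upper bound, treated here as exact
HUMAN_WRITTEN_SUBMISSIONS = 300   # hypothetical batch with no AI-generated text

# Expected number of human-written submissions wrongly flagged.
expected_false_flags = FALSE_POSITIVE_RATE * HUMAN_WRITTEN_SUBMISSIONS

# Probability that at least one submission is wrongly flagged,
# assuming each submission is flagged independently of the others.
p_at_least_one = 1 - (1 - FALSE_POSITIVE_RATE) ** HUMAN_WRITTEN_SUBMISSIONS

print(f"Expected wrongly flagged submissions: {expected_false_flags:.1f}")
print(f"Probability of at least one false flag: {p_at_least_one:.0%}")
```

Under these assumptions, about three submissions would be wrongly flagged, and the chance of at least one false flag in the batch is roughly 95%. The real rate may well be lower, but the point stands: over enough submissions, a wrongly flagged student is something to expect rather than an exception.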

Turnitin provides resources to help both teachers and students address these situations [3]. The principles I listed above and those in the resources are in line with Aalto University’s Academic Code of Integrity [4]. Until Aalto’s guidelines on the acceptable and unacceptable use of AI in coursework are published, this is a good way of dealing with cases of suspected misconduct in this area.

Finally, some insights and facts I want to share. I attended a couple of webinars on Turnitin’s AI detector and resolved a few detector-related issues that our teachers at Aalto ran into, and in doing so I learned a few things about the technical aspects of the detector. Most of the items listed below are discussed in detail in Turnitin’s FAQ on the detector [5].

- The vast pool of submissions in Turnitin’s database was used to fine-tune the detector. This has probably taught the detector to better identify what students’ texts ‘look’ like.

- The analysis begins by looking at blocks of text containing several sentences and then narrows down to the sentence level. This is why you will see gaps between sentences in the text highlighted as AI-generated.

- Sentences are analysed using a language model, and so the detector expects to find structures that appear in sentences. What this means is that, among other things, lists containing items without sentence structure may be ignored in the analysis.

- Turnitin is unable to distinguish between AI text proposed by language-check services like Grammarly and AI text generated by language model services like ChatGPT. So, if a student uses text suggested by Grammarly as is, Turnitin will probably flag it as AI-generated, because it is. This may come as a surprise to both students and teachers.

- The document analysed must contain at least 150 words and at most 15000 words. When the word count exceeds the upper limit, the detector appears to the teacher as unavailable. The upper limit is probably due to the heavy computational resources the analysis requires. The file size and the accepted file formats are also restricted.

- Students don’t see the AI tool in their view in Feedback Studio.

- For now, the AI detection works only for texts in English.
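If the AI report is missing for a submission, the word limits above are an easy first thing to rule out. The following is a minimal Python sketch of such a check. The function name `within_word_limits` is made up for this example, and counting words by splitting on whitespace is an assumption; Turnitin’s own word count may differ, so treat the result as a rough indication only.

```python
# Word limits as described in the list above.
MIN_WORDS = 150
MAX_WORDS = 15_000


def within_word_limits(text: str) -> bool:
    """Return True if the text's word count lies within the stated limits.

    Words are counted by splitting on whitespace, which is an assumption:
    Turnitin's own counting method may give a slightly different number.
    """
    word_count = len(text.split())
    return MIN_WORDS <= word_count <= MAX_WORDS


if __name__ == "__main__":
    sample = "word " * 120  # a hypothetical 120-word submission
    print(within_word_limits(sample))  # False: below the 150-word minimum
```

A submission that passes this check may still get no report for other reasons, such as the language of the text, the file size, or the file format.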

I hope this information sheds some light on the workings of the detector and, more importantly, on how to face the challenges that AI is throwing at us. I also hope that our teachers will be able to turn these challenges into golden opportunities for our students to learn.

References

[5] Frequently Asked Questions, Turnitin’s AI Writing Detection Capabilities.