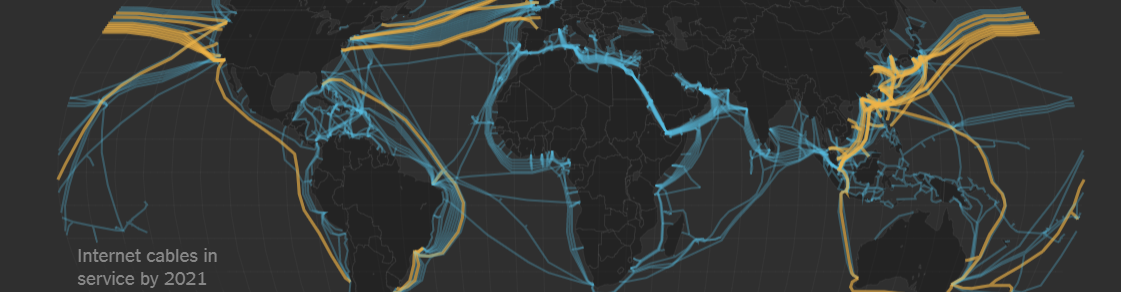

I was excited by the large scale of the media infrastructures mentioned in the introduction chapter, and by how things are connected but hard to see. The connection between the web and the biophysical world is easy to forget. Where do all the small pieces of ordinary websites, such as cookies, come from? Who provides them, and why do they exist? Are they hyperobjects?

In the autumn, I studied CAPTCHA systems for a project, especially Google’s reCAPTCHA, one of the most commonly used CAPTCHA programs on the internet. reCAPTCHA is a fully automated web security program that developers can use for free to protect their sites. Its primary function is to determine whether a visitor to a page is a human (good) or a “robot” such as a spam bot (bad).

But reCAPTCHA, unlike earlier CAPTCHAs, creates secondary value too.

reCAPTCHA challenges are designed so that visitors produce useful data for Google while solving them. That data has been used to digitize old books, improve Google Maps, and develop machine learning algorithms.

Different captchas. (Source: back40design.com.)

Some critics have seen a connection between reCAPTCHA and Google’s deal with the U.S. Department of Defense to analyze drone footage. Manuel Beltran argues that, while solving Google’s CAPTCHA challenges, unwitting people become labour that creates data to help the U.S. Army.

Another blind spot for people on the web is trackers, which collect information about users. Probably the most commonly used web service that relies on trackers is Google Analytics. When its tracker is placed on a website, each visitor’s browser sends data to Google’s servers. Those servers are located somewhere: maybe in Hamina, maybe in the United States.
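To make that "sends data" step less abstract, here is a rough sketch of what a single page-view hit could look like. It assumes the older Universal Analytics Measurement Protocol (endpoint `/collect`, parameters `v`, `tid`, `cid`, `t`, `dh`, `dp`); the function name `build_pageview_hit` and all the values are made up for illustration, and the sketch only builds the URL without sending anything.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Assumption: the Universal Analytics Measurement Protocol endpoint.
COLLECT_ENDPOINT = "https://www.google-analytics.com/collect"


def build_pageview_hit(tracking_id, client_id, host, path):
    """Build (but do not send) the URL of one page-view hit."""
    params = {
        "v": "1",            # protocol version
        "tid": tracking_id,  # the site owner's tracking ID
        "cid": client_id,    # pseudonymous ID stored in the visitor's cookie
        "t": "pageview",     # hit type
        "dh": host,          # hostname of the visited site
        "dp": path,          # path of the visited page
    }
    return COLLECT_ENDPOINT + "?" + urlencode(params)


# Placeholder values, not real identifiers.
hit = build_pageview_hit("UA-XXXXX-Y", "555", "example.org", "/contact")
print(hit)
```

Every page a visitor opens can produce a request like this, addressed to a server that the site owner neither runs nor sees.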

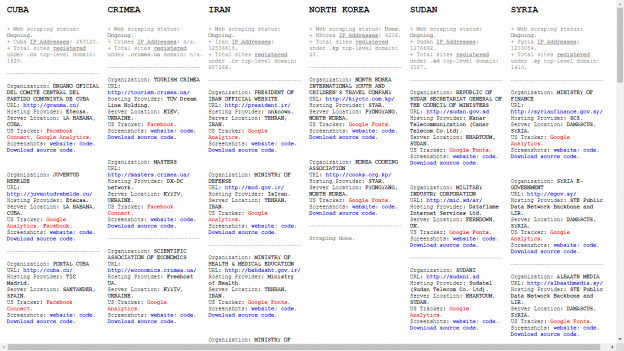

Screenshot of the project website Algorithms Allowed.

Artist Joana Moll investigated the use of trackers on websites in countries and regions against which the US enforces embargoes and sanctions, including Cuba, Iran, North Korea, Sudan, Syria, and the Ukrainian region of Crimea. She scraped websites registered under these countries’ domains.

She found some interesting cases of trackers owned by US companies: the official website of the President of Iran uses Google Analytics, as do the Ministry of Defense of Iran, the Ministry of Finance of Syria, and so on.

It is amusing that the webmasters of these governmental websites let US corporations read their visitors’ data. I argue that this happens because it is hard for people to understand that a few lines of code on a website may mean something in the physical world too.
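Checking a page for such trackers does not take much. The sketch below is my own guess at the simplest possible detector, not Moll's actual method: it looks through a page's `<script>` tags for hostnames associated with Google Analytics. The host list, the class `ScriptCollector` and the function `find_trackers` are illustrative assumptions, and the list is far from complete.

```python
from html.parser import HTMLParser

# Assumption: a small, incomplete list of hosts that serve Google's trackers.
TRACKER_HOSTS = {
    "www.google-analytics.com": "Google Analytics",
    "ssl.google-analytics.com": "Google Analytics",
    "www.googletagmanager.com": "Google Tag Manager",
}


class ScriptCollector(HTMLParser):
    """Collect the src attributes and inline bodies of <script> tags."""

    def __init__(self):
        super().__init__()
        self.in_script = False
        self.fragments = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.in_script = True
            src = dict(attrs).get("src")
            if src:
                self.fragments.append(src)

    def handle_endtag(self, tag):
        if tag == "script":
            self.in_script = False

    def handle_data(self, data):
        # Inline snippets often name the tracker URL inside the script body.
        if self.in_script:
            self.fragments.append(data)


def find_trackers(html):
    """Return the sorted names of known trackers referenced by the page's scripts."""
    collector = ScriptCollector()
    collector.feed(html)
    collector.close()
    text = "\n".join(collector.fragments).lower()
    return sorted({name for host, name in TRACKER_HOSTS.items() if host in text})


page = '<script async src="https://www.googletagmanager.com/gtag/js?id=G-EXAMPLE"></script>'
print(find_trackers(page))
```

A real survey would also need to download the pages and handle trackers that are loaded indirectly, which this sketch ignores.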

Link to Joana Moll’s project Algorithms Allowed (2017).